Lorentz-FitzGerald contraction

also called space contraction, in relativity physics, the shortening of an object along the direction of its motion relative to an observer. Dimensions in other directions are not contracted. The concept of the contraction was proposed by the Irish physicist George FitzGerald in 1889, and it was thereafter independently developed by Hendrik Lorentz of The Netherlands. The Michelson-Morley experiment in the 1880s had challenged the postulates of classical physics by finding no difference in the speed of light measured in different directions, as though the speed of light were the same for all observers regardless of their relative motion. FitzGerald and Lorentz attempted to preserve the classical concepts by showing how a contraction of the measuring apparatus along its direction of motion would reduce the apparent constancy of the speed of light to the status of an experimental artifact.

In 1905 the German-American physicist Albert Einstein reversed the classical view by proposing that the speed of light is indeed a universal constant and showing that space contraction then becomes a logical consequence of the relative motion of different observers. Significant at speeds approaching that of light, the contraction is a consequence of the properties of space and time and does not depend on compression, cooling, or any similar physical disturbance. See also time dilation.
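
The size of the effect can be made concrete with the standard special-relativity formula L = L0 * sqrt(1 - v^2/c^2), where L0 is the object's length at rest and v its speed relative to the observer. The Python sketch below is illustrative only; the function names lorentz_factor and contracted_length are hypothetical helpers, not part of any library.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact SI value)


def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed v in m/s (|v| < c)."""
    if abs(v) >= C:
        raise ValueError("speed must be less than the speed of light")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def contracted_length(proper_length: float, v: float) -> float:
    """Length measured along the direction of motion by an observer who
    sees the object move at speed v; proper_length is its length at rest."""
    return proper_length / lorentz_factor(v)


if __name__ == "__main__":
    # A 1 m rod at roughly the Earth's orbital speed (~30 km/s) shrinks by
    # only about 5 parts in a billion...
    print(contracted_length(1.0, 3.0e4))
    # ...but at 0.6c it is measured as 0.8 m along its direction of motion.
    print(contracted_length(1.0, 0.6 * C))
```

Only the dimension parallel to the motion is divided by gamma; widths perpendicular to the motion would be passed through unchanged.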

Lorentz transformations

set of equations in relativity physics that relate the space and time coordinates of two systems moving at a constant velocity relative to each other. Required to describe high-speed phenomena approaching the speed of light, Lorentz transformations formally express the relativity concepts that space and time are not absolute; that length, time, and mass depend on the relative motion of the observer; and that the speed of light in a vacuum is constant and independent of the motion of the observer or the source. The equations were developed by the Dutch physicist Hendrik Antoon Lorentz in 1904. See also Galilean transformations.
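
For the common textbook case in which frame S' moves at speed v along the shared x axis of frame S, the transformations take the form x' = gamma (x - v t) and t' = gamma (t - v x / c^2), with y and z unchanged. The sketch below assumes that one-dimensional setup and sets it beside the classical Galilean transformation for comparison. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def lorentz_transform(x, t, v):
    """Map an event (x, t) in frame S to (x', t') in frame S', which moves
    at constant velocity v along the shared x axis (y, z unchanged)."""
    beta = v / C
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    x_prime = gamma * (x - v * t)
    t_prime = gamma * (t - v * x / C**2)
    return x_prime, t_prime


def galilean_transform(x, t, v):
    """Classical counterpart: x' = x - v t, t' = t (time is absolute)."""
    return x - v * t, t


if __name__ == "__main__":
    v = 0.5 * C
    # A light pulse leaves the origin at t = 0, so at time t it is at x = c t.
    t = 1.0e-6
    x = C * t
    xp, tp = lorentz_transform(x, t, v)
    print(xp / tp)  # pulse speed seen in S': c, up to rounding
    xg, tg = galilean_transform(x, t, v)
    print(xg / tg)  # Galilean prediction: 0.5c
```

Both observers measure the same speed for the light pulse only under the Lorentz form. The Galilean form simply subtracts velocities.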

Michelson-Morley experiment

an attempt to detect the velocity of the Earth with respect to the hypothetical luminiferous ether, a medium in space proposed to carry light waves. First performed in Berlin in 1881 by the physicist A.A. Michelson, the test was later refined in 1887 by Michelson and E.W. Morley in the United States.

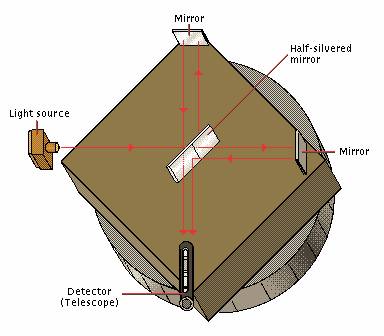

The procedure depended on a Michelson interferometer, a sensitive optical device that compares the optical path lengths for light moving in two mutually perpendicular directions. It was reasoned that, if the speed of light were constant with respect to the proposed ether through which the Earth was moving, that motion could be detected by comparing the speed of light in the direction of the Earth's motion and the speed of light at right angles to the Earth's motion. No difference was found. This null result seriously discredited the ether theories and ultimately led to the proposal by Albert Einstein in 1905 that the speed of light is a universal constant.
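
The expectation being tested can be worked out with elementary kinematics, treating the ether as a stationary medium in which light always moves at c. Light in the arm parallel to the motion travels upstream at c - v and back at c + v. Light in the perpendicular arm must be aimed slightly upstream, so it crosses at sqrt(c^2 - v^2) each way. The sketch below assumes an arm length of 11 m. That is an illustrative value, close to the effective path length usually quoted for the 1887 apparatus. The function name round_trip_times is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def round_trip_times(arm_length, v):
    """Classical ether-theory round-trip times (seconds) for light in an arm
    parallel and an arm perpendicular to the apparatus's motion through a
    stationary ether at speed v (m/s)."""
    # Parallel arm: upstream at c - v, then back downstream at c + v.
    t_parallel = arm_length / (C - v) + arm_length / (C + v)
    # Perpendicular arm: net crossing speed sqrt(c^2 - v^2) in each direction.
    t_perpendicular = 2 * arm_length / math.sqrt(C**2 - v**2)
    return t_parallel, t_perpendicular


if __name__ == "__main__":
    L = 11.0   # illustrative arm length in metres (assumption)
    v = 3.0e4  # roughly the Earth's orbital speed, m/s
    t_par, t_perp = round_trip_times(L, v)
    print(t_par - t_perp)  # positive: the parallel round trip takes longer
    # Shrinking only the parallel arm by sqrt(1 - v^2/c^2), as FitzGerald
    # and Lorentz proposed, makes the two round-trip times equal again.
    shrink = math.sqrt(1.0 - (v / C) ** 2)
    t_par_short, _ = round_trip_times(L * shrink, v)
    print(t_par_short - t_perp)  # zero apart from floating-point rounding
```

The predicted difference is tiny, only a few times 10^-16 s. That is why an interference method, rather than direct timing, was needed.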

The Michelson interferometer consists of a half-transparent mirror that divides a light beam into two equal parts (A and B), one of which is transmitted to a fixed mirror and the other of which is reflected to a movable mirror. The interferometer is turned so that beam A is oriented parallel to the Earth's motion and beam B is perpendicular to it.

MICHELSON-MORLEY EXPERIMENT

Michelson-Morley Apparatus

In 1887 Albert Michelson and Edward Morley attempted to measure the speed of the earth with respect to the ether, a substance postulated to be necessary for transmitting light. Their method involved splitting a beam of light so that half went straight ahead and half went sideways. If the apparatus (attached to the earth) moved relative to the ether, then light going in one direction should travel at a different speed than light going in the other, just as boats going downstream travel faster than boats going across. No evidence of a difference in speed was found, however, which led not only to the demise of the ether theory but also to the development of the Special Theory of Relativity by Albert Einstein 18 years later.

Historically, the best-known interferometer is the one devised about 1887 by the American physicist Albert Michelson for an experiment he conducted with the American chemist Edward Morley. The experiment was designed to measure the absolute motion of the earth through a hypothetical substance called the ether, erroneously presumed to exist as the carrier of light waves. Were the earth moving through a stationary ether, light traveling out and back along a path parallel to the earth's direction of motion would take longer to complete the round trip than light traveling the same distance out and back along a path perpendicular to the earth's motion. The interferometer was arranged so that a beam of light was divided along two paths at right angles to each other; the rays were then reflected and recombined, producing interference fringes where the two beams met. If the hypothesis of the ether were correct, as the apparatus was rotated the two beams of light would interchange their roles (the one that traveled more rapidly in the first position would travel more slowly in the second position), and a shift of interference fringes would occur. Michelson and Morley failed to find such a shift, and later experiments confirmed this null result. Today the propagation of electromagnetic waves through empty space has replaced the concept of the ether.
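
The size of the fringe shift the ether hypothesis predicted can be estimated to leading order in v/c. In each orientation the two round trips differ in optical path by about L v^2 / c^2. Rotating the apparatus through 90 degrees reverses that difference, so the expected shift is about 2 L v^2 / (lambda c^2) fringes. The sketch assumes equal arms of an illustrative 11 m, an orbital speed of 30 km/s, and visible light of 500 nm. None of these figures is taken from the text above, and expected_fringe_shift is a hypothetical name.

```python
C = 299_792_458.0  # speed of light, m/s


def expected_fringe_shift(arm_length, v, wavelength):
    """Fringe shift predicted by classical ether theory when an equal-arm
    interferometer is rotated through 90 degrees (leading order in v/c).

    Each orientation gives an optical path difference of about L v^2 / c^2,
    and the rotation reverses its sign, so the total change is
    2 L v^2 / c^2, i.e. 2 L v^2 / (wavelength c^2) fringes."""
    return 2.0 * arm_length * v**2 / (wavelength * C**2)


if __name__ == "__main__":
    shift = expected_fringe_shift(arm_length=11.0, v=3.0e4, wavelength=500e-9)
    print(round(shift, 2))  # about 0.44 of a fringe
```

Because the predicted shift grows with v^2, doubling the assumed speed would quadruple it.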

Interferometer

I INTRODUCTION

Interferometer, instrument that uses the interference of light waves for the ultraprecise measurement of the wavelengths of light itself, of small distances, and of certain optical phenomena. Because the instrument measures distances in terms of light waves, it allowed the standard meter to be defined in terms of the wavelength of light, as it was from 1960 until 1983 (see Metric System).

Many forms of the instrument are used, but in each case two or more beams of light travel separate optical paths, determined by a system of mirrors and plates, and are finally united to form interference fringes. In one form of interferometer for measuring the wavelength of monochromatic light, the apparatus is so arranged that a mirror in the path of one of the beams of light can be moved forward through a small distance, which can be accurately measured, thus varying the optical path of the beam. Moving the mirror through a distance equal to one-half of the wavelength of the light causes one complete cycle of changes in the pattern of interference fringes, because the light travels to the mirror and back, so its optical path changes by one full wavelength. The wavelength is calculated by measuring the number of cycles caused by moving the mirror through a measured distance.
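
The calculation in the last sentence reduces to a single relation. A mirror displacement d changes the round-trip path by 2d, and each fringe cycle marks one wavelength of path change, so N = 2d / lambda and lambda = 2d / N. The sketch below applies that relation to a made-up reading; the function name and the numbers are hypothetical.

```python
def wavelength_from_fringes(mirror_displacement, fringe_cycles):
    """Wavelength (m) implied by counting fringe cycles while one mirror
    moves through mirror_displacement metres.

    The round-trip optical path changes by 2 d, and each complete fringe
    cycle corresponds to one wavelength of path change, so
    wavelength = 2 d / N."""
    if fringe_cycles <= 0:
        raise ValueError("need at least one counted fringe cycle")
    return 2.0 * mirror_displacement / fringe_cycles


if __name__ == "__main__":
    # Hypothetical reading: 1000 cycles counted over 0.2947 mm of travel.
    lam = wavelength_from_fringes(0.2947e-3, 1000)
    print(f"{lam * 1e9:.1f} nm")  # 589.4 nm
```

Counting many cycles over a longer displacement reduces the relative error contributed by the uncertainty in d.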

II USES

When the wavelength of the light used is known, small distances in the optical path can be measured by analyzing the interference patterns produced. This technique is used to measure the surface contours of telescope mirrors. The refractive indices of substances are also measured with the interferometer, the refractive index being calculated from the shift in interference fringes caused by the retardation of the beam as it passes through the substance. The principle of the interferometer is also used to measure the diameter of large stars, such as Betelgeuse. Because modern interferometers can measure very tiny angles, they are further used, again on nearby giants such as Betelgeuse, to obtain images of actual brightness variations on the surfaces of such stars. This technique is known as speckle interferometry.
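
Two of these uses reduce to short formulas, sketched below under stated assumptions. For refractive index, the sketch assumes a gas cell of length L placed in one arm and traversed twice. Filling it from vacuum adds an optical path of 2 (n - 1) L, so a shift of N fringes gives n = 1 + N lambda / (2 L). For stellar diameters, it assumes a Michelson stellar interferometer and a star modelled as a uniformly bright disk. The fringes first vanish when the mirror separation B satisfies theta = 1.22 lambda / B. Both function names and all numbers are hypothetical or illustrative.

```python
import math


def refractive_index_from_shift(fringe_shift, wavelength, cell_length):
    """Refractive index of a gas filling a cell of length cell_length (m)
    that the beam traverses twice (out and back).

    Filling the evacuated cell adds an optical path of 2 (n - 1) L, and each
    fringe of shift is one wavelength, so n = 1 + N * wavelength / (2 L)."""
    return 1.0 + fringe_shift * wavelength / (2.0 * cell_length)


def uniform_disk_diameter(wavelength, baseline):
    """Angular diameter (radians) of a star modelled as a uniform disk, from
    the mirror separation at which the fringes first vanish:
    theta = 1.22 * wavelength / baseline."""
    return 1.22 * wavelength / baseline


if __name__ == "__main__":
    # Hypothetical reading: 100 fringes pass as a 10 cm cell fills with gas.
    n = refractive_index_from_shift(100, 589e-9, 0.10)
    print(f"n = {n:.7f}")
    # Illustrative values: 575 nm light, fringes vanishing at a 3.07 m baseline.
    theta = uniform_disk_diameter(575e-9, 3.07)
    print(f"{math.degrees(theta) * 3600:.3f} arcsec")
```

Real stars are darker toward their limbs, so the 1.22 factor for a uniform disk is itself a simplifying assumption.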

The interferometer principle has also been extended to other wavelengths, and it is now widely employed in radio astronomy.

Microsoft® Encarta® Reference Library 2003. © 1993-2002 Microsoft Corporation. All rights reserved.